KVM on ARM Performance

For a hypervisor to be relevant and keep pace with current trends, high performance and efficiency are among the most important factors. KVM on ARM has been part of upstream Linux since version 3.9 and demonstrates low virtualization overhead. Virtual Open Systems has been actively profiling KVM on ARM through continuous evaluation on various hardware platforms (VExpress, Arndale, OMAP5 uEVM, Chromebook) and by implementing micro-benchmarks to characterize and further enhance the hypervisor's capabilities.

The company provides services for assessing virtualization performance on custom heterogeneous ARMv7/ARMv8 platforms and develops optimization techniques for selected use cases. The results shown here were obtained on the Versatile Express (Cortex-A15 TC2) development platform and an Exynos5250 Chromebook laptop.

Benchmarking CPU and Memory bound workloads

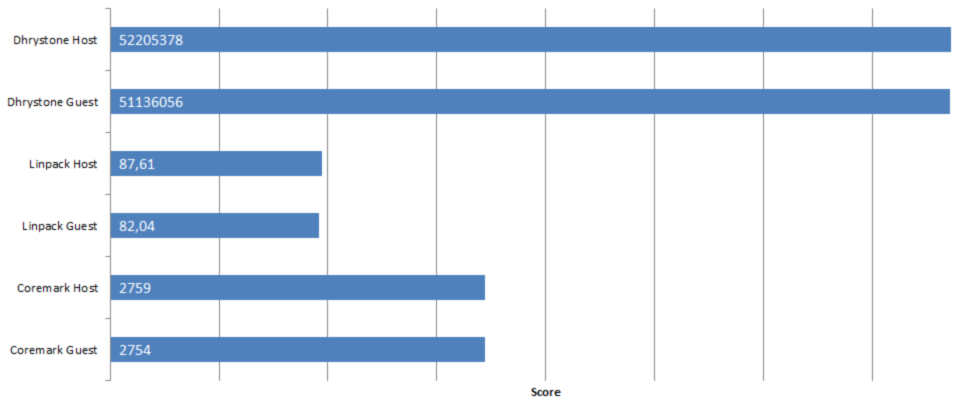

For CPU stress tests and benchmarks that do not rely on I/O, KVM on ARM shows the benefits of full virtualization. KVM excels at such workloads, with near-native performance and an overhead well below the 10% that is commonly expected. The comparison below shows host and guest scores under different benchmark utilities.

Comparison of host/guest scores on various CPU stress tests (higher is better)

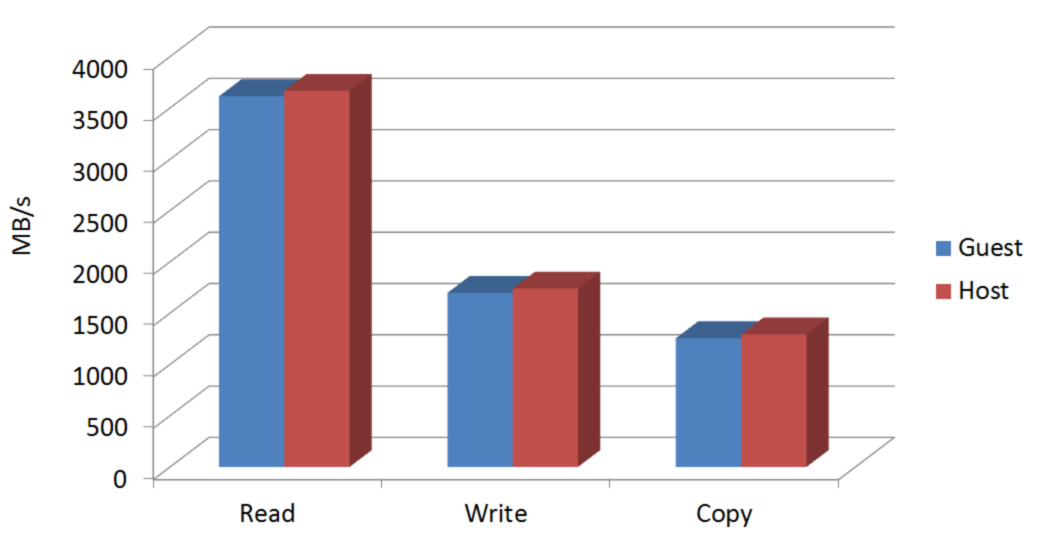

Memory handling is a major advantage of KVM on ARM, which relies on the well-known Linux infrastructure. Memory bandwidth measurements with the lmbench3 tool show the same positive results as for CPU workloads.

Comparison of host/guest memory bandwidth (higher is better)
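The "near native" claim for CPU and memory workloads can be expressed as a relative overhead between host and guest scores. The following is a minimal sketch of that arithmetic for "higher is better" metrics; the function name and the sample scores are hypothetical placeholders, not measurements from this article.

```python
def overhead_percent(host_score: float, guest_score: float) -> float:
    """Relative guest slowdown, in percent, for a 'higher is better' score."""
    if host_score <= 0:
        raise ValueError("host_score must be positive")
    return (host_score - guest_score) / host_score * 100.0


# Hypothetical placeholder scores (host, guest); NOT results from the article.
samples = {
    "cpu_benchmark": (1000.0, 970.0),
    "memory_bandwidth": (2000.0, 1900.0),
}

for name, (host, guest) in samples.items():
    overhead = overhead_percent(host, guest)
    # Compare against the 10% overhead mentioned in the text.
    verdict = "below 10%" if overhead < 10.0 else "10% or more"
    print(f"{name}: {overhead:.1f}% overhead ({verdict})")
```

A negative result would mean the guest scored higher than the host, which usually points to measurement noise rather than a real speedup.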

I/O Virtualization Performance - Emulation vs Paravirtualization

There are various ways to handle I/O in virtualization. Depending on the complexity of the virtualized device and the constraints of the selected use case, different approaches and compromises are needed. The most prominent options are emulation and paravirtualization, with direct device assignment as a further option.

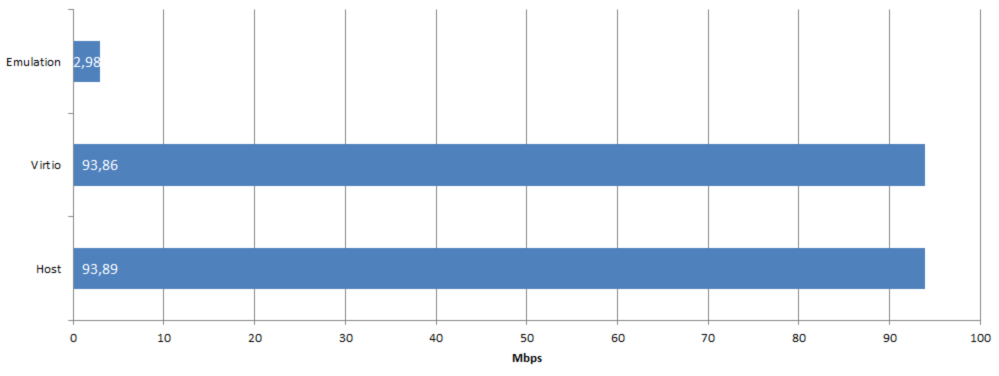

Emulation adds considerable overhead and is used only in the simplest of cases. Paravirtualization, by contrast, can significantly accelerate I/O in guest systems. By using Virtio, KVM can achieve excellent performance and keep I/O-related bottlenecks to a minimum. For networking, host and guest bandwidth can be compared using netperf:

Networking performance of an emulated network interface against Virtio and the host (higher is better)

Similar behaviour can be observed with other workloads, such as disk I/O, where emulation can severely impact performance whereas Virtio can achieve near-native performance. In addition, vhost-net can further improve networking performance in virtualized systems for use cases demanding higher bandwidth (1 Gbps or more).
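As an illustration of how a Virtio network device and vhost-net might be selected in practice, here is a minimal sketch that builds the relevant command-line arguments. It assumes QEMU as the userspace component (the article does not say which one was used), a virtio-mmio guest device (`virtio-net-device`), and an already configured host tap interface named `tap0`. The helper name and the interface name are hypothetical.

```python
from typing import List


def virtio_net_args(tap_ifname: str = "tap0", use_vhost: bool = False) -> List[str]:
    """Build QEMU arguments for a paravirtualized (Virtio) guest NIC.

    Assumes QEMU and an existing host tap interface; both are assumptions
    of this sketch, not details taken from the article.
    """
    # Host side: attach to an existing tap interface; no helper scripts are run.
    backend = f"tap,id=net0,ifname={tap_ifname},script=no,downscript=no"
    if use_vhost:
        # vhost-net moves the Virtio data path into the host kernel.
        backend += ",vhost=on"
    # Guest side: Virtio network device on the virtio-mmio transport.
    return ["-netdev", backend, "-device", "virtio-net-device,netdev=net0"]


print(" ".join(virtio_net_args()))
print(" ".join(virtio_net_args(use_vhost=True)))
```

An emulated interface would replace the `-device` model with one that the chosen board provides, which is why it is left out of this sketch.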

From this overview we can conclude that, by utilizing the full virtualization extensions of newer ARM architectures coupled with paravirtualization mechanisms to accelerate I/O, KVM delivers excellent performance.
